Getting to know Docker

Docker

This post is an introduction to Docker: a high-level overview of what Docker actually is and how its environment works. Docker is a great tool that reduces complexity and adds flexibility when shipping an application.

It is an open-source project that automates the deployment of software applications inside containers, adding a layer of abstraction and automation on top of OS-level virtualization.

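The industry has traditionally used virtual machines (VMs) to run software applications: each application runs inside a guest operating system, which in turn runs on virtual hardware. VMs provide full process isolation for applications, but they carry a high computational overhead, spent virtualizing hardware for the guest OS. Containers avoid most of this overhead because they share the host's kernel instead of booting their own.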

Process: A process is an instance of an executing program. Processes are managed by the operating system kernel, which is in charge of allocating resources and managing process creation and interaction.

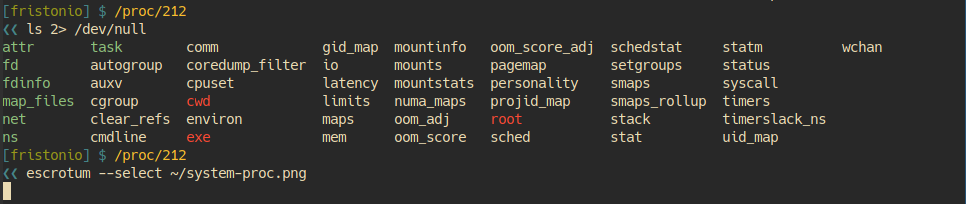

On Linux, the kernel exposes every process under the /proc directory. Since everything in Linux is a file, all the files related to a process live in a subdirectory named after its process ID: /proc/<PID>.
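A small Python sketch of this /proc layout, assuming a Linux host. The helper names are hypothetical; the files they read (/proc/<PID>/comm and /proc/<PID>/status) are standard procfs entries.

```python
import os


def list_pids():
    """Return the PIDs visible to this process: the numeric entries of /proc."""
    return sorted(int(name) for name in os.listdir("/proc") if name.isdigit())


def process_name(pid):
    """Read a process's command name from /proc/<PID>/comm."""
    with open(f"/proc/{pid}/comm") as f:
        return f.read().strip()


def process_status(pid):
    """Parse /proc/<PID>/status ('Key:<tab>value' lines) into a dict."""
    status = {}
    with open(f"/proc/{pid}/status") as f:
        for line in f:
            key, _, value = line.partition(":")
            status[key] = value.strip()
    return status


if __name__ == "__main__":
    me = os.getpid()
    print("PID:", me, "name:", process_name(me), "parent:", process_status(me)["PPid"])
    print("PIDs visible to me:", len(list_pids()))
```

Inside a container with its own PID namespace, the same script sees only the container's processes.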

Containers:

Two Linux kernel features, namespaces and cgroups, let us isolate processes from each other, so that we can pretend a group of processes has something like its own virtual machine. A container is essentially a process, or group of processes, isolated with these features.

Cgroups and Namespaces

Together, cgroups and namespaces isolate a process or a group of processes, creating the abstraction we call a container.

- Cgroups, or control groups, limit the resources (CPU, memory, I/O and so on) that a group of processes can use. Look under /sys/fs/cgroup to see which cgroups are administered.

For example, using cgroups we can limit the amount of memory the processes in a container can use.
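To see which cgroups a process belongs to, read /proc/<PID>/cgroup. A Python sketch, assuming a Linux host: parse_cgroup splits each line into hierarchy ID, controllers and cgroup path (on a pure cgroup v2 system there is a single line such as 0::/some/path), and memory_limit_v2 reads the memory.max file of a cgroup v2 path, which holds a byte count or the word max. Both helper names are hypothetical. Docker sets this kind of limit when a container is started with the --memory flag.

```python
import os


def parse_cgroup(text):
    """Parse /proc/<PID>/cgroup lines of the form 'hierarchy-ID:controller-list:cgroup-path'."""
    entries = []
    for line in text.splitlines():
        if not line.strip():
            continue
        hierarchy_id, controllers, path = line.split(":", 2)
        entries.append((int(hierarchy_id), controllers.split(",") if controllers else [], path))
    return entries


def memory_limit_v2(cgroup_path, root="/sys/fs/cgroup"):
    """Return memory.max in bytes for a cgroup v2 path, or None when unlimited ('max')."""
    with open(os.path.join(root, cgroup_path.lstrip("/"), "memory.max")) as f:
        value = f.read().strip()
    return None if value == "max" else int(value)


if __name__ == "__main__":
    with open("/proc/self/cgroup") as f:
        for hierarchy_id, controllers, path in parse_cgroup(f.read()):
            print(hierarchy_id, controllers or "(unified hierarchy)", path)
```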

Namespaces limit what a group of processes can see and access within the system. They provide the isolation needed to run multiple containers while giving each one what appears to be its own environment. There are several types of namespace:

- PID namespace: gives a process and its children their own view of a subset of the processes in the system. It works like a mapping table: whenever a process in a PID namespace asks the kernel for a list of processes, the kernel looks in the mapping table and, if the process is mapped, reports the mapped ID instead of the real one. Processes outside the namespace are not visible at all.

- NET: the network namespace gives processes their own network stack. They do not see the host's real network interfaces, only virtual ones, which lets programs in different containers listen on the same port without conflicting.

- MNT: the mount namespace gives the processes within it their own mount table, so they can mount and unmount filesystems without affecting the host's filesystems. By swapping the filesystem the container sees, a process can believe it is running on an operating system other than the host OS, while still sharing the host kernel.

- USER: the user namespace maps the UIDs a process sees to a different set of UIDs on the host. We can map the container's root UID (0) to an arbitrary, usually unprivileged, UID on the host, giving a process root privileges inside the container without giving it root privileges on the host.

- IPC: isolates inter-process communication resources such as System V IPC objects and POSIX message queues.

- UTS: gives the processes their own view of the system's hostname and domain name.
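The USER namespace mapping can be inspected in /proc/<PID>/uid_map, where each line reads ID-inside-ns ID-outside-ns length. A Python sketch with hypothetical helper names that parses such a file and translates a UID seen inside the namespace into the UID outside it. The mapping 0 100000 65536 used below, which maps container root to host UID 100000, is an illustrative assumption.

```python
def parse_id_map(text):
    """Parse uid_map/gid_map text into (inside_start, outside_start, length) tuples."""
    ranges = []
    for line in text.splitlines():
        if line.strip():
            inside, outside, length = (int(field) for field in line.split())
            ranges.append((inside, outside, length))
    return ranges


def to_host_uid(uid, ranges):
    """Translate a UID seen inside the namespace to the outside UID (None if unmapped)."""
    for inside, outside, length in ranges:
        if inside <= uid < inside + length:
            return outside + (uid - inside)
    return None


if __name__ == "__main__":
    with open("/proc/self/uid_map") as f:
        print(parse_id_map(f.read()))  # outside any user namespace: an identity mapping
    container_map = parse_id_map("0 100000 65536")
    print(to_host_uid(0, container_map))  # container root -> host UID 100000
```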

On Linux, the unshare program can be used to run a program in new namespaces.
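Each namespace a process belongs to shows up as a symbolic link under /proc/<PID>/ns, and two processes share a namespace when their links point to the same target. A Python sketch, assuming Linux; the helper names are hypothetical. Running it once normally and once under, for example, sudo unshare --net python3 script.py should show a different net entry in the second run.

```python
import os


def namespaces(pid="self"):
    """Return {namespace type: link target} for a process, e.g. {'net': 'net:[4026531840]'}."""
    ns_dir = f"/proc/{pid}/ns"
    return {name: os.readlink(os.path.join(ns_dir, name)) for name in sorted(os.listdir(ns_dir))}


def same_namespace(pid_a, pid_b, kind):
    """True when both processes' ns links for `kind` point to the same namespace."""
    return namespaces(pid_a)[kind] == namespaces(pid_b)[kind]


if __name__ == "__main__":
    for kind, target in namespaces().items():
        print(f"{kind:18} {target}")
```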

Docker Architecture

runC

Docker internally uses runC as its container runtime. runC is a portable CLI tool for spawning and running containers using the kernel features described above. It was developed by Docker and later donated to the OCI (Open Container Initiative), which creates standards around container formats and runtimes. The OCI maintains specifications including the runtime specification and the image-format specification.

containerd, another tool in this stack, uses runC to spawn containers while also managing the entire lifecycle of a container.
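To make the runtime specification a little more concrete, here is a Python sketch that builds a trimmed-down OCI runtime config.json as a dictionary. The field names (ociVersion, process, root, linux.namespaces, linux.resources.memory.limit) come from the OCI runtime specification, but the selection of fields and their values are assumptions for illustration. A real config, such as the one runc spec generates, contains many more fields (mounts, capabilities and so on), and this one is not enough to start a container by itself.

```python
import json


def minimal_oci_config(args, rootfs="rootfs", memory_limit=None):
    """Build a trimmed-down OCI runtime config (config.json) as a dict.

    Illustrative only: a usable config also needs mounts, capabilities, etc.
    """
    config = {
        "ociVersion": "1.0.2",
        "process": {
            "terminal": False,
            "user": {"uid": 0, "gid": 0},
            "args": args,
            "env": ["PATH=/usr/local/sbin:/usr/local/bin:/usr/sbin:/usr/bin:/sbin:/bin"],
            "cwd": "/",
        },
        # Directory holding the container's root filesystem, mounted read-only.
        "root": {"path": rootfs, "readonly": True},
        "hostname": "demo",
        "linux": {
            # Namespaces the runtime creates for the container.
            "namespaces": [{"type": t} for t in ("pid", "network", "ipc", "uts", "mount")],
        },
    }
    if memory_limit is not None:
        # A cgroup memory limit, in bytes.
        config["linux"]["resources"] = {"memory": {"limit": memory_limit}}
    return config


if __name__ == "__main__":
    print(json.dumps(minimal_oci_config(["sh"], memory_limit=64 * 1024 * 1024), indent=2))
```

The namespaces list and the resources block are where the namespace and cgroup features described earlier surface in the runtime's input.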

Docker Engine

Docker Engine is the API users work with to manage their containers. It deals with the filesystem and the network, and provides an easy way to build, maintain and deploy containers. Docker is a client-server application: the docker CLI talks to the Docker daemon over a REST API.
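Since the docker CLI is just a client of the daemon's REST API, we can talk to the same API ourselves. A minimal Python sketch, assuming the daemon listens on its default Unix socket /var/run/docker.sock and that the current user may access it. GET /version and GET /containers/json are Docker Engine API endpoints; UnixHTTPConnection and docker_get are hypothetical helper names.

```python
import http.client
import json
import socket


class UnixHTTPConnection(http.client.HTTPConnection):
    """An HTTPConnection that talks to a Unix domain socket instead of TCP."""

    def __init__(self, socket_path, timeout=5):
        super().__init__("localhost", timeout=timeout)  # host only fills the Host header
        self.socket_path = socket_path

    def connect(self):
        sock = socket.socket(socket.AF_UNIX, socket.SOCK_STREAM)
        sock.settimeout(self.timeout)
        try:
            sock.connect(self.socket_path)
        except OSError:
            sock.close()
            raise
        self.sock = sock


def docker_get(path, socket_path="/var/run/docker.sock"):
    """Send GET <path> to the Docker daemon and decode the JSON response."""
    conn = UnixHTTPConnection(socket_path)
    try:
        conn.request("GET", path)
        response = conn.getresponse()
        return json.loads(response.read())
    finally:
        conn.close()


if __name__ == "__main__":
    try:
        print("daemon version:", docker_get("/version")["Version"])
        for container in docker_get("/containers/json"):
            print(container["Id"][:12], container["Image"], container["Status"])
    except OSError as exc:
        print("could not reach the Docker daemon:", exc)
```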

To create a container we need a root filesystem and some configuration. Docker images provide this: an image is a layered set of root filesystems and acts as a blueprint for the container.

To create an image, we write a configuration file called a Dockerfile, which gives Docker the instructions for building these layers.

Each instruction in the Dockerfile is a step in the build phase, and the instructions that change the filesystem (RUN, COPY and ADD) each produce a separate image layer. Each image layer is just a diff of the layer before it.

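A typical example of a Dockerfile:

```dockerfile
# Base image for all following instructions
FROM ubuntu:xenial

# Working directory for the instructions below and for the running container
WORKDIR /usr/src/app

# On xenial the nodejs package installs /usr/bin/nodejs, so link it as node
RUN apt-get update \
    && apt-get -y install curl git nodejs \
    && ln -s /usr/bin/nodejs /usr/bin/node \
    && rm -rf /var/lib/apt/lists/*

# Copy the application source from the build context into WORKDIR
COPY source .

# Default command when the container starts (exec form)
CMD ["node", "index.js"]
```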

- FROM: base image used for all subsequent instructions.

- WORKDIR: working directory for the instructions that follow it, and the default directory when the container starts.

- RUN: commands to run while the image is being built.

- COPY: copies files from the build context on the host into the image.

- CMD: default command to run when the container starts.

A Docker image is an immutable, ordered collection of root filesystem layers. An image layer is therefore the modification made by one instruction in the Dockerfile, and stacking all the layers gives the complete image. Because each layer only stores its diff from the layer below, layers stay small and can be shared between images, at the cost of some overhead in recording the diffs.
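A toy Python model of layers as diffs, assuming a filesystem snapshot can be represented as a dict from path to file content. diff computes the layer between two snapshots, recording deletions with a hypothetical WHITEOUT marker (loosely mirroring the whiteout files real storage drivers use), and flatten stacks the layers bottom to top to rebuild the complete image.

```python
class _Whiteout:
    """Marker meaning 'this path was deleted in this layer'."""

    def __repr__(self):
        return "WHITEOUT"


WHITEOUT = _Whiteout()


def diff(lower, upper):
    """Return the layer that turns snapshot `lower` into snapshot `upper`."""
    layer = {path: data for path, data in upper.items() if lower.get(path) != data}
    for path in lower:
        if path not in upper:
            layer[path] = WHITEOUT
    return layer


def flatten(layers):
    """Stack layers bottom to top to get the complete root filesystem."""
    fs = {}
    for layer in layers:
        for path, data in layer.items():
            if data is WHITEOUT:
                fs.pop(path, None)
            else:
                fs[path] = data
    return fs


if __name__ == "__main__":
    base = {"/etc/os-release": "ubuntu", "/tmp/cache": "junk"}
    after_run = {"/etc/os-release": "ubuntu", "/usr/bin/node": "<binary>"}
    after_copy = {**after_run, "/usr/src/app/index.js": "console.log('hi')"}
    layers = [diff({}, base), diff(base, after_run), diff(after_run, after_copy)]
    assert flatten(layers) == after_copy
    print([len(layer) for layer in layers])  # prints [2, 2, 1]
```

Each layer only holds what changed, which is why unchanged files such as /etc/os-release are stored once.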

Storage drivers:

Docker image layers are immutable (read-only). When a container is created, Docker adds a thin writable layer on top of them: this is the container layer, and any data written to it is lost when the container is removed.

Whenever a container modifies an existing file, the storage driver searches the image layers for it, top down, one layer at a time, and caches the result to speed up later operations. The file is then copied up into the writable container layer and the write goes to that copy. This copy-on-write strategy leaves the image layers untouched and stores only the changed files, which keeps writes efficient.

The storage driver is in charge of writes to the container layer and of how images are stored on the host.
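The copy-on-write behaviour above can be sketched as a toy Python class, assuming layers are dicts from path to content as in a simple in-memory model. ContainerFS is a hypothetical name, and real drivers also handle deletions, permissions and directories, which this sketch ignores.

```python
class ContainerFS:
    """Toy copy-on-write view: read-only image layers plus one writable container layer."""

    def __init__(self, image_layers):
        self.image_layers = image_layers  # bottom-to-top list of dicts, never modified
        self.container_layer = {}         # the thin writable layer on top
        self.copied_up = []               # paths copied up from image layers

    def read(self, path):
        # Look top down: the container layer first, then image layers newest first.
        if path in self.container_layer:
            return self.container_layer[path]
        for layer in reversed(self.image_layers):
            if path in layer:
                return layer[path]
        raise FileNotFoundError(path)

    def write(self, path, data):
        # Creating or fully overwriting a file needs no copy-up.
        self.container_layer[path] = data

    def append(self, path, data):
        # Modifying an existing file: copy it up once, then change only the copy.
        if path not in self.container_layer:
            self.container_layer[path] = self.read(path)
            self.copied_up.append(path)
        self.container_layer[path] += data
```

Removing the container amounts to discarding container_layer, which is why data written there does not outlive the container.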

Docker Compose

Docker Compose is a tool for building more complex, multi-container applications. It lets us define the application setup in a YAML file, where we can manage environment variables, startup order, volumes, networks and much more.

Docker CLI

Run docker run hello-world to check that the Docker installation works correctly.

docker pull [image]

The pull command fetches the specified image from a Docker registry (Docker Hub by default) and saves it on the system. To list all the images on the system, use docker images.

docker run [image]

This command runs a Docker container based on the image. If we run docker pull busybox followed by docker run busybox, nothing appears on the screen, but a lot happens in the background: the Docker client finds the image, creates the container and runs a command in it. In this case we didn't provide a command, so the image's default command (sh for busybox) ran without a terminal attached, had nothing to do, and the container exited silently.

docker ps shows all the containers that are currently running, while docker ps -a shows all containers, including those that exited in the past. Exited containers stay on disk, so leaving stray containers around eats up disk space; we remove them with docker rm [container-id].

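docker rm $(docker ps -a -q -f status=exited) removes all containers whose status is exited: -q prints only container IDs and -f status=exited filters on the status. (docker container prune removes all stopped containers in one step.)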

docker rmi [image] removes the image that we no longer need.

docker run -it busybox sh: the -i and -t flags keep stdin open and allocate a pseudo-TTY, attaching us to an interactive shell in the container.

The -d flag runs the container in detached mode, in the background, instead of attaching it to the current terminal.

Running a container with plain docker run does not publish its ports. The -P flag publishes all exposed ports to random ports on the host, while -p host_port:container_port maps a specific port. The --name flag specifies a name that can be used to reference the container.

docker port [CONTAINER] : shows all the ports of the container and the actual ports they are mapped to.
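When a container publishes many ports, the docker port output is easy to post-process. A Python sketch, assuming output lines of the form CONTAINER_PORT/PROTO -> HOST_IP:HOST_PORT, where an IPv6 host address may appear as :: or [::] depending on the Docker version; parse_docker_port is a hypothetical helper.

```python
def parse_docker_port(output):
    """Parse `docker port` lines such as '9200/tcp -> 0.0.0.0:9200'."""
    mappings = []
    for line in output.splitlines():
        if "->" not in line:
            continue
        container_side, host_side = (part.strip() for part in line.split("->"))
        port, proto = container_side.split("/")
        # Split on the last colon so IPv6 host addresses stay intact.
        host_ip, _, host_port = host_side.rpartition(":")
        mappings.append((int(port), proto, host_ip.strip("[]"), int(host_port)))
    return mappings
```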

Docker Images

Docker images are the basis of Docker containers. An image is a read-only blueprint from which a container is created. We can either build our own image or use one from a Docker registry. Our own images are built from a Dockerfile, which holds the blueprint instructions, and each running instance of an image is a container.

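Each instruction in a Dockerfile is a build step, and the filesystem-changing ones (RUN, COPY, ADD) create layers in the image. When you change the Dockerfile and rebuild the image, the changed layer and every layer after it are rebuilt, while the layers before it are reused from the build cache.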

- docker build: builds an image from a Dockerfile. Pass the path of the directory containing the Dockerfile (the build context) and use the -t flag to tag the image with a name.

- docker images: lists the available images.

- docker run: runs a container from the built image.

We can also push our own images to a registry such as Docker Hub.

Docker Network

Networking is an essential part of Docker whenever containers need to communicate with each other. By default Docker creates three networks, which can be listed with docker network ls.

```
$ docker network ls
NETWORK ID     NAME      DRIVER    SCOPE
c818ff7e7110   bridge    bridge    local
a15e946c7de4   host      host      local
788a88744a36   none      null      local
```

The bridge network is the one containers join by default. To see which containers are attached to a network, use docker network inspect [NETWORK NAME].

docker network inspect bridge shows the details of the bridge network, including its subnet and the containers attached to it.

```
$ docker network inspect bridge
[
    {
        "Name": "bridge",
        "Id": "8022115322ec80613421b0282e7ee158ec41e16f565a3e86fa53496105deb2d7",
        "Scope": "local",
        "Driver": "bridge",
        "IPAM": {
            "Driver": "default",
            "Config": [
                {
                    "Subnet": "172.17.0.0/16"
                }
            ]
        },
        "Containers": {
            "e931ab24dedc1640cddf6286d08f115a83897c88223058305460d7bd793c1947": {
                "EndpointID": "66965e83bf7171daeb8652b39590b1f8c23d066ded16522daeb0128c9c25c189",
                "MacAddress": "02:42:ac:11:00:02",
                "IPv4Address": "172.17.0.2/16",
                "IPv6Address": ""
            }
        },
        "Options": {
            "com.docker.network.bridge.default_bridge": "true",
            "com.docker.network.bridge.enable_icc": "true",
            "com.docker.network.bridge.enable_ip_masquerade": "true",
            "com.docker.network.bridge.host_binding_ipv4": "0.0.0.0",
            "com.docker.network.bridge.name": "docker0",
            "com.docker.network.driver.mtu": "1500"
        }
    }
]
```

So, to communicate with a container, we can use its IP address from inside the subnet. But since this IP may change, we cannot hard-code it in our application. Also, since the default bridge network is shared by all containers, this way of communicating is not secure.
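To look the IP up at runtime instead of hard-coding it, the inspect output can be parsed. A Python sketch, assuming the docker CLI is on the PATH for inspect_network; both helper names are hypothetical, and the JSON keys (Containers, IPv4Address) are the ones in the output above.

```python
import json
import subprocess


def container_ips(inspect_output):
    """Map short container ID -> IPv4 address from `docker network inspect` JSON."""
    ips = {}
    for network in json.loads(inspect_output):
        for container_id, endpoint in (network.get("Containers") or {}).items():
            # IPv4Address is in CIDR form, e.g. "172.17.0.2/16"; keep the address only.
            ips[container_id[:12]] = endpoint["IPv4Address"].split("/")[0]
    return ips


def inspect_network(name="bridge"):
    """Run `docker network inspect <name>` and return its raw JSON output."""
    result = subprocess.run(["docker", "network", "inspect", name],
                            capture_output=True, text=True, check=True)
    return result.stdout
```

For the output shown above, container_ips(inspect_network("bridge")) would return {"e931ab24dedc": "172.17.0.2"}. It still has to be re-run whenever the address changes, which is the problem user-defined networks solve.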

Docker solves this problem with user-defined networks: we can create our own network using docker network create [NETWORK NAME]. By default this creates a new bridge network, which is what we need here; other network types can be created by choosing a different driver.

To let these containers communicate with each other, we launch them inside the same network using the --net [NETWORK NAME] flag, and give each one a name with --name [CONTAINER NAME] so that other containers can identify it. In the Docker version used here, Docker then creates entries in /etc/hosts on its own, mapping each container's name to its IP, so we can reach a container by name. Newer Docker versions instead resolve container names on user-defined networks through an embedded DNS server.

```
$ docker run -dp 9200:9200 --net my_network --name es my_image
# Run the container detached (-d), publish port 9200 on the host (-p),
# attach it to my_network and name it es

$ docker run -it --rm --net my_network image2 bash
root@53af252b771a:/# cat /etc/hosts
172.18.0.3    53af252b771a
127.0.0.1     localhost
::1           localhost ip6-localhost ip6-loopback
fe00::0       ip6-localnet
ff00::0       ip6-mcastprefix
ff02::1       ip6-allnodes
ff02::2       ip6-allrouters
172.18.0.2    es
172.18.0.2    es.my_network
root@53af252b771a:/# curl es:9200
{
  [RESPONSE AT THAT PORT]
}
```
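Name lookup through /etc/hosts is just a table scan. A Python sketch of that lookup, assuming hosts-file syntax (an IP followed by one or more names, # starting a comment); parse_hosts is a hypothetical helper, and the first matching line wins.

```python
def parse_hosts(text):
    """Map each hostname/alias in /etc/hosts-style text to its IP address."""
    table = {}
    for line in text.splitlines():
        line = line.split("#", 1)[0].strip()  # drop comments and surrounding blanks
        if not line:
            continue
        ip, *names = line.split()
        for name in names:
            table.setdefault(name, ip)  # keep the first match for each name
    return table
```

Fed the /etc/hosts content from the session above, parse_hosts(...)["es"] gives 172.18.0.2, the address curl es:9200 connected to.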

Earlier versions of Docker used links (the --link flag) to let containers communicate over the bridge; links are a legacy feature and may be removed in future Docker releases.

Docker Compose

docker-compose is one of Docker's open-source tools, used for defining and running multi-container Docker applications. It reads a file named docker-compose.yml to set up the environment.

A simple docker-compose.yml file looks something like this:

```yaml
version: "2"
services:
  es:
    image: elasticsearch
  web:
    image: my_web
    command: python app.py
    ports:
      - "5000:5000"
    volumes:
      - .:/code
```

To start the application, run docker-compose up in the directory containing docker-compose.yml. To run it in detached mode, add the -d flag.

docker-compose ps will show all the running containers.

docker-compose stop stops the running containers.

Under the hood, docker-compose creates a new bridge network (by default named <project>_default) and attaches the services to it. The new network shows up in docker network ls.

In this case, however, /etc/hosts inside a container has an entry mapping the container's IP to a random-looking string (its own hostname), but no entry for es. Yet the application still works when it uses the name es, because Docker runs an internal DNS server that resolves these hostnames itself.
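One way to see the internal DNS server at work is to check which nameserver a container uses and resolve a service name through the normal resolver. A Python sketch, assuming it runs inside one of the Compose containers; nameservers and resolve are hypothetical helper names.

```python
import socket


def nameservers(resolv_conf_text):
    """Return the nameserver addresses listed in resolv.conf-style text."""
    servers = []
    for line in resolv_conf_text.splitlines():
        fields = line.split()
        if len(fields) >= 2 and fields[0] == "nameserver":
            servers.append(fields[1])
    return servers


def resolve(name):
    """Resolve a hostname to an IPv4 address, or return None if the lookup fails."""
    try:
        return socket.gethostbyname(name)
    except OSError:  # socket.gaierror is a subclass of OSError
        return None
```

Inside a container on the Compose network, nameservers(open("/etc/resolv.conf").read()) typically returns ["127.0.0.11"], the address of Docker's embedded DNS server, and resolve("es") returns the es service's IP even though /etc/hosts has no entry for it.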

This way docker-compose cleanly manages container initialization and networking on its own, under the hood.

Despite the fascinating idea of OS-level virtualization that Docker is built on, it still struggles to provide the best possible experience. Though the community is very helpful, the system is still far from stable and runs into a hell of a lot of deadlocks. I hope they fix these issues soon.